Big data has become the lifeblood of modern enterprises, powering decisions, insights, and innovation. As businesses grapple with ever-larger volumes of data, choosing the right database becomes paramount.

In this article, we delve into the intricacies of finding the best database for big data, considering various factors and real-world applications.

Best Database for Big Data: Navigating the Digital Ocean

I. Introduction

A. Definition of Big Data

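Big data refers to the massive volumes of structured, semi-structured, and unstructured data that organizations generate and collect every day. It is commonly characterized by its volume, its velocity (the speed at which it arrives), and its variety (the range of formats it takes). When properly analyzed, this data yields insights that can drive business growth and efficiency.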

B. Importance of Databases in Big Data

Databases are the backbone of big data systems, providing a structured framework to store, manage, and retrieve information. The choice of a database significantly impacts the efficiency of handling big data.

II. Characteristics of a Good Database for Big Data

A. Scalability

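The ability to scale is crucial for keeping up with rapidly growing data. Vertical scaling (scaling up) adds CPU, memory, or storage to a single server. Horizontal scaling (scaling out) adds more servers and spreads data and load across them. Vertical scaling eventually runs into hardware limits, so databases built for big data typically favor horizontal scaling. A good database should accommodate growth in both data volume and user load without a major redesign.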

B. Performance

Efficient data retrieval and processing are vital for the success of big data applications. A high-performing database ensures quick access to information and speedy analytics.

C. Flexibility

Big data comes in various forms, from traditional structured data to semi-structured and unstructured data. A flexible database can adapt to different data types and structures.

D. Security

With the rise of cyber threats, ensuring the security of sensitive data is paramount. The best databases for big data provide robust security features, such as authentication, role-based access control, encryption in transit and at rest, and audit logging, to protect against unauthorized access and data breaches.

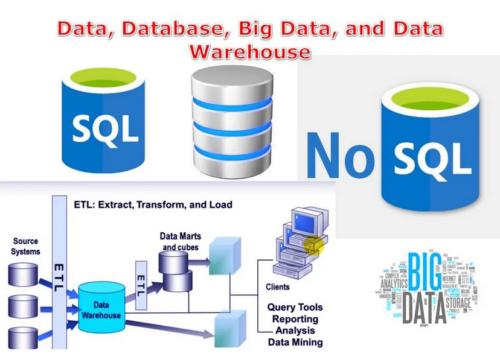

III. Types of Databases for Big Data

A. Relational Databases

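Relational databases such as MySQL and PostgreSQL have traditionally been used for structured data. They store data in tables with a predefined schema and are queried with SQL. They are known for data integrity and consistency, which they enforce through constraints and ACID (atomicity, consistency, isolation, durability) transactions. Their main limitation for big data is that they are usually built around a single primary server, so scaling out typically requires read replicas or sharding.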
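The following sketch makes the ACID guarantee concrete with an atomic transfer between two accounts. Python's built-in sqlite3 module stands in for MySQL or PostgreSQL, and the `accounts` table and `transfer` function are hypothetical. If any statement in the transaction fails, the whole transaction is rolled back, so the database never exposes a half-finished transfer.

```python
import sqlite3

# In-memory SQLite database standing in for a relational server (illustrative only).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    " id INTEGER PRIMARY KEY,"
    " balance INTEGER NOT NULL CHECK (balance >= 0))"  # integrity rule enforced by the DB
)
conn.executemany("INSERT INTO accounts (id, balance) VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()

def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst atomically; return True on success."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            # Credit first, then debit: if the debit violates the CHECK constraint,
            # the credit that already ran is rolled back as well.
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False

def balances(conn):
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))

print(transfer(conn, 1, 2, 30), balances(conn))   # True {1: 70, 2: 80}
print(transfer(conn, 1, 2, 500), balances(conn))  # False {1: 70, 2: 80}
```

The credit runs before the debit on purpose. Ordering it this way forces the failure to happen in the middle of the transaction, which shows that the earlier credit is undone rather than left behind.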

B. NoSQL Databases

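NoSQL databases are designed for unstructured and semi-structured data. They relax the fixed relational schema and are typically built to scale horizontally. They come in several families, including document stores such as MongoDB, wide-column stores such as Cassandra, and key-value stores, and they excel at handling diverse data types.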

C. NewSQL Databases

These databases aim to combine the best of both worlds: the horizontal scalability of NoSQL with the SQL interface and ACID transactions of relational databases. Examples include Google Cloud Spanner, CockroachDB, and TiDB.

D. In-Memory Databases

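In-memory databases such as Redis store data primarily in RAM rather than on disk, which makes data access very fast. This suits them to real-time analytics, caching, and other latency-sensitive workloads. The trade-offs are that RAM is more expensive and more limited than disk, and durability depends on the persistence options configured. Redis, for example, can persist data through snapshots or an append-only file.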

IV. Comparison of Popular Databases

A. MongoDB

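MongoDB is a document-oriented NoSQL database. It stores records as flexible JSON-like documents (BSON), so documents in the same collection can have different fields. It handles large volumes of semi-structured data well and scales horizontally through sharding.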

B. Cassandra

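Cassandra is a wide-column NoSQL database built for scalability and fault tolerance, and it powers many high-traffic websites. Its architecture is decentralized and peer-to-peer, with no single master node. Cassandra distributes data across nodes by hashing each row's partition key and replicates it to several nodes, so the cluster keeps serving requests when individual nodes fail.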
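Cassandra decides which nodes hold a row by hashing its partition key onto a token ring. The sketch below illustrates the general idea with consistent hashing and virtual nodes. It is a simplified model, not Cassandra's implementation: Cassandra's default partitioner uses Murmur3, while this example uses MD5 from the standard library. The node names and the `HashRing` class are hypothetical.

```python
import bisect
import hashlib

def token(key: str) -> int:
    """Map a string to a position on the ring (MD5 here; Cassandra's default partitioner uses Murmur3)."""
    return int.from_bytes(hashlib.md5(key.encode("utf-8")).digest()[:8], "big")

class HashRing:
    """Simplified consistent-hashing ring with virtual nodes and replication."""

    def __init__(self, nodes, vnodes=64, replicas=3):
        self.replicas = replicas
        # Each physical node owns several virtual tokens so load spreads more evenly.
        self._ring = sorted((token(f"{node}#{i}"), node) for node in nodes for i in range(vnodes))
        self._tokens = [t for t, _ in self._ring]

    def replicas_for(self, key: str):
        """Walk clockwise from the key's token, collecting distinct nodes as replicas."""
        start = bisect.bisect(self._tokens, token(key))
        owners = []
        for i in range(len(self._ring)):
            node = self._ring[(start + i) % len(self._ring)][1]
            if node not in owners:
                owners.append(node)
                if len(owners) == self.replicas:
                    break
        return owners

ring = HashRing(["node-a", "node-b", "node-c", "node-d"])
print(ring.replicas_for("user:42"))  # three distinct nodes; which ones depends on the hashes
```

This scheme has a useful property. Removing a node reassigns only the keys that node owned, and every other key keeps its primary owner. That property is what lets a decentralized cluster grow or shrink without reshuffling all of its data.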

C. Amazon DynamoDB

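Amazon DynamoDB is a fully managed key-value and document database service on AWS. It offers automatic scaling and consistently low-latency performance. Because AWS handles provisioning, replication, and patching, DynamoDB is a popular choice for cloud-based applications.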

D. Apache HBase

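HBase is part of the Hadoop ecosystem. It is a distributed, scalable wide-column NoSQL database modeled after Google's Bigtable and built on top of HDFS. It provides strongly consistent reads and writes and is well suited to random, real-time read and write access on very large tables.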

V. Factors to Consider When Choosing a Database

A. Data Volume

Consider the size of your data. Relational databases may suffice for smaller datasets, but as data grows, NoSQL or NewSQL databases may be more suitable.

B. Data Variety

If your data includes diverse formats like text, images, and videos, opt for a database that supports varied data types.

C. Data Velocity

For applications requiring real-time processing, choose a database that can handle high data velocity, such as in-memory databases.

D. Cost

Evaluate the total cost of ownership, considering factors like licensing, hardware, and maintenance. Some databases may offer cost-effective solutions for specific use cases.

VI. Challenges in Handling Big Data

A. Data Storage

The sheer volume of big data poses challenges in storage. Choosing a database with efficient data compression and storage optimization is essential.
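The sketch below gives a rough illustration of why compression matters at scale. It compresses a batch of hypothetical JSON log records with Python's standard zlib module. Many databases instead apply codecs such as LZ4, Snappy, or Zstandard to individual storage blocks, so treat the resulting ratio as a general indication for repetitive data, not as a figure for any particular engine.

```python
import json
import zlib

# Hypothetical event records: repetitive, semi-structured data like this compresses well.
records = [
    {"event": "page_view", "user_id": i % 500, "country": "US", "path": "/products"}
    for i in range(10_000)
]
raw = "\n".join(json.dumps(r) for r in records).encode("utf-8")
compressed = zlib.compress(raw, 6)  # level 6: zlib's default speed/ratio balance

print(f"raw: {len(raw):,} bytes, compressed: {len(compressed):,} bytes, "
      f"ratio: {len(raw) / len(compressed):.1f}x")

# Compression is lossless: decompressing restores the exact original bytes.
assert zlib.decompress(compressed) == raw
```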

B. Data Processing

Processing large datasets in real time demands powerful processing capabilities. Ensure your chosen database can handle the processing demands of your application.

C. Data Analysis

Extracting meaningful insights from big data requires advanced analytics capabilities. Choose a database with built-in analytics tools or seamless integration with analytics platforms.

VII. Case Studies

A. Netflix’s Use of Apache Cassandra

Netflix relies on Apache Cassandra to manage its vast catalog and user data. The database’s scalability and fault tolerance align with Netflix’s need for a high-performance system.

B. Facebook’s Use of MySQL and HBase

Facebook has used MySQL for structured data and HBase for semi-structured data. This hybrid approach allows it to handle the diverse data generated by billions of users.

C. Uber’s Use of Redis

Uber employs Redis for real-time data processing, enhancing its ride-matching algorithms. The in-memory database provides the speed and responsiveness required for dynamic decision-making.

VIII. Best Practices in Utilizing Big Data Databases: Maximizing Efficiency and Performance

In the ever-evolving landscape of big data, leveraging databases efficiently is paramount to extracting meaningful insights and maintaining optimal performance.

This section outlines best practices to guide businesses in navigating the complexities of big data databases and ensuring their systems operate at peak efficiency.

A. Proper Indexing

– Understanding Query Patterns

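Efficient indexing begins with a deep understanding of query patterns. Analyze the typical queries your database will face and build indexes around the columns they filter, join, and sort on. A well-chosen index lets the database locate matching rows without scanning the entire table, which significantly accelerates data retrieval. Indexes carry costs, however. Each one consumes storage and must be updated on every write, so index what your queries actually need rather than every column.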
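The sketch below walks through this workflow on a hypothetical `events` table, using SQLite (through Python's sqlite3 module) as a stand-in. It identifies the dominant query, inspects its plan, adds an index that matches the filter and sort columns, and confirms that the plan changed. MySQL and PostgreSQL support the same check through their `EXPLAIN` statements, although their output formats differ.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, created_at TEXT, payload TEXT)")
conn.executemany(
    "INSERT INTO events (user_id, created_at, payload) VALUES (?, ?, ?)",
    [(i % 1000, f"2024-01-{i % 28 + 1:02d}", "x") for i in range(20_000)],
)

# The query pattern assumed to dominate the workload (hypothetical).
QUERY = "SELECT created_at, payload FROM events WHERE user_id = ? ORDER BY created_at"

def plan(conn, sql, params):
    """Return the 'detail' column (4th field) of SQLite's EXPLAIN QUERY PLAN rows."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

before = plan(conn, QUERY, (42,))  # full table scan plus a temporary sort

# Composite index: the equality-filter column first, then the sort column.
conn.execute("CREATE INDEX idx_events_user_created ON events (user_id, created_at)")
after = plan(conn, QUERY, (42,))   # index lookup; rows already come back in sorted order

print("before:", before)
print("after: ", after)
```

Putting `user_id` first and `created_at` second lets one index serve both the filter and the `ORDER BY`, which removes the separate sort step as well as the scan.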

– Regular Index Maintenance

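Indexing is not a one-time task. Regularly review indexes as the data and queries evolve. Add indexes for new access patterns, drop ones that are no longer used, and keep the query optimizer's statistics up to date. This proactive maintenance keeps indexes aligned with how the data is actually accessed.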
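A minimal maintenance routine might look like the following SQLite sketch. The list of unused indexes is supplied by hand here as an assumption. In practice you would derive it from the database's usage statistics; PostgreSQL, for example, exposes per-index scan counts in `pg_stat_user_indexes`. The table, the index names, and the `maintain_indexes` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT)")
conn.executemany(
    "INSERT INTO orders (customer_id, status) VALUES (?, ?)",
    [(i % 300, "shipped" if i % 10 else "pending") for i in range(5_000)],
)
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
# Assume queries no longer filter on status, so this index only slows down writes.
conn.execute("CREATE INDEX idx_orders_status ON orders (status)")
conn.commit()

def maintain_indexes(conn, unused):
    """Drop indexes that current queries no longer use, then refresh planner statistics."""
    for name in unused:  # names come from a trusted, hand-maintained list in this sketch
        conn.execute(f'DROP INDEX IF EXISTS "{name}"')
    conn.execute("ANALYZE")  # rebuilds sqlite_stat1, which the query planner consults
    conn.commit()

maintain_indexes(conn, ["idx_orders_status"])
print([r[0] for r in conn.execute("SELECT name FROM sqlite_master WHERE type = 'index'")])
print(list(conn.execute("SELECT tbl, idx, stat FROM sqlite_stat1")))
```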

B. Data Partitioning

– Distributing Data Across Nodes

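To enhance scalability and minimize bottlenecks, distribute data across multiple nodes, a technique often called sharding. Each node stores and serves only a portion of the data, so storage and query load are spread across machines and work can proceed in parallel. This helps the database handle increased loads, including peak traffic, as long as data and requests are spread evenly across the nodes.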

– Partitioning Strategies

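Choose a partitioning strategy based on the nature of your data and queries. Each of the common strategies has its own trade-offs:

Range-based partitioning assigns rows by key ranges, for example by month. It suits time-series data and range scans, but the most recent range can become a hotspot.

Hash-based partitioning spreads keys evenly across partitions, but a range query then has to touch every partition.

List-based partitioning maps discrete values, such as regions or product categories, to specific partitions.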
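The three strategies can be sketched as simple routing functions that map a row to a partition number. This is an illustrative model, not any particular database's implementation, and the partition count, quarterly bounds, and region mapping are hypothetical. The hash function is MD5 because Python's built-in `hash()` is randomized per process for strings and would route the same key differently after a restart.

```python
import bisect
import hashlib
from datetime import date

NUM_PARTITIONS = 4

def hash_partition(key: str, n: int = NUM_PARTITIONS) -> int:
    """Hash partitioning: a stable hash of the key, modulo the partition count."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % n

# Range partitioning with hypothetical quarterly bounds: partition i holds dates
# before RANGE_BOUNDS[i] (and on or after RANGE_BOUNDS[i - 1] when i > 0).
RANGE_BOUNDS = [date(2024, 4, 1), date(2024, 7, 1), date(2024, 10, 1), date(2025, 1, 1)]

def range_partition(d: date) -> int:
    i = bisect.bisect_right(RANGE_BOUNDS, d)
    if i == len(RANGE_BOUNDS):
        raise ValueError(f"{d} is past the last range bound; add a new partition first")
    return i

# List partitioning: an explicit mapping of discrete values (hypothetical regions).
REGION_PARTITIONS = {"US": 0, "CA": 0, "DE": 1, "FR": 1, "JP": 2, "IN": 2}

def list_partition(country: str) -> int:
    return REGION_PARTITIONS.get(country, 3)  # partition 3 is a catch-all default

print(range_partition(date(2024, 5, 17)), list_partition("FR"))  # 1 1
print(hash_partition("user:42"))  # some value in 0..3, identical on every run
```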

C. Regular Maintenance

– Data Cleanup

Perform routine data cleanup to eliminate unnecessary or redundant information. This practice not only conserves storage space but also contributes to faster data retrieval and improved overall database efficiency.

– Optimization Tasks

Regularly conduct optimization tasks, including defragmentation and compression. These tasks help in reclaiming storage space, reducing latency, and ensuring that the database operates smoothly over time.
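Cleanup and optimization often work together. Deleting rows frees space inside the database file, and a compaction step then returns that space to the operating system. The sketch below shows this in SQLite, where `VACUUM` rebuilds the file. Other engines have their own equivalents, such as compaction in Cassandra and HBase. The table and the retention cutoff are hypothetical.

```python
import os
import sqlite3
import tempfile

# File-backed database so the on-disk size change is observable (temp dir left for inspection).
path = os.path.join(tempfile.mkdtemp(), "events.db")
conn = sqlite3.connect(path)
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, created_at TEXT, payload TEXT)")
conn.executemany(
    "INSERT INTO events (created_at, payload) VALUES (?, ?)",
    [(f"2023-{m:02d}-01", "x" * 200) for m in range(1, 13) for _ in range(1_000)],
)
conn.commit()

def cleanup(conn, cutoff):
    """Delete rows older than `cutoff` (hypothetical retention rule), then VACUUM."""
    with conn:  # DELETE runs in its own committed transaction
        deleted = conn.execute("DELETE FROM events WHERE created_at < ?", (cutoff,)).rowcount
    free_before = conn.execute("PRAGMA freelist_count").fetchone()[0]  # pages freed but still in the file
    conn.execute("VACUUM")  # rebuilds the file compactly; must run outside a transaction
    free_after = conn.execute("PRAGMA freelist_count").fetchone()[0]
    return deleted, free_before, free_after

size_before = os.path.getsize(path)
deleted, free_before, free_after = cleanup(conn, "2023-10-01")
size_after = os.path.getsize(path)
print(f"deleted {deleted} rows; file {size_before:,} -> {size_after:,} bytes; "
      f"free pages {free_before} -> {free_after}")
conn.close()
```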

Incorporating these best practices into your big data database management strategy can make a substantial difference in the efficiency and performance of your system. By focusing on proper indexing, strategic data partitioning, and regular maintenance, businesses can unlock the full potential of their big data databases, ensuring a robust and responsive data infrastructure.

IX. Future Trends in Big Data Databases

As we stand at the crossroads of technological advancement, the future of big data databases promises exciting developments that will reshape how we store, process, and extract insights from vast amounts of information.

In this section, we explore the emerging trends that are set to define the landscape of big data databases in the years to come.

A. Machine Learning Integration

– Real-time Decision-Making

The integration of machine learning algorithms within big data databases is poised to revolutionize real-time decision-making. By analyzing patterns and trends on the fly, databases will empower businesses to make instantaneous, data-driven choices, enhancing operational efficiency and responsiveness.

– Predictive Analytics

Machine learning’s predictive capabilities will be seamlessly woven into the fabric of big data databases. This integration will enable businesses to anticipate future trends, identify potential challenges, and proactively adapt their strategies, ultimately gaining a competitive edge in dynamic markets.

B. Blockchain and Big Data

– Enhanced Security

Combining blockchain technology with big data databases promises stronger data integrity. Each block is cryptographically linked to the previous one, and the ledger is replicated across participants, so tampering with stored records becomes detectable. This protects integrity rather than confidentiality. Preventing unauthorized access and data breaches still depends on access control and encryption.

– Transparency and Accountability

Blockchain’s transparency features will bring a new level of accountability to big data systems. Every transaction and modification to the database will be recorded in an immutable ledger, fostering trust among users and stakeholders.
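The tamper-evidence behind such a ledger comes from hash chaining. Each entry stores the hash of the previous one, so altering any past entry breaks every link after it. The sketch below shows only that chaining idea, with hypothetical record contents. It has no distribution or consensus mechanism, so it is not a blockchain.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def entry_hash(prev_hash: str, record: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding of the record."""
    payload = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append(ledger: list, record: dict) -> None:
    """Append-only write: each entry commits to the hash of the one before it."""
    prev = ledger[-1]["hash"] if ledger else GENESIS
    ledger.append({"record": record, "prev": prev, "hash": entry_hash(prev, record)})

def verify(ledger: list) -> bool:
    """Recompute every link; an edited record or a reordered entry breaks the chain."""
    prev = GENESIS
    for entry in ledger:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True

ledger = []
append(ledger, {"op": "update", "table": "accounts", "id": 1, "balance": 70})
append(ledger, {"op": "update", "table": "accounts", "id": 2, "balance": 80})
print(verify(ledger))  # True

ledger[0]["record"]["balance"] = 1_000  # tamper with history
print(verify(ledger))  # False
```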

C. Edge Computing and Databases

– Low-Latency Processing

The proliferation of edge computing will drive the development of databases optimized for low-latency processing. By decentralizing data processing to the edge, businesses can achieve faster response times, particularly critical for applications requiring real-time analytics and decision-making.

– Edge-Based Data Storage

Big data databases will evolve to incorporate edge-based data storage solutions. This approach ensures that relevant data is stored and processed closer to the source, reducing latency and bandwidth usage, particularly beneficial for applications in remote or resource-constrained environments.

X. Conclusion

In the ever-expanding landscape of big data, choosing the right database is a critical decision. The best database for big data depends on factors such as scalability, performance, and data variety. By considering these factors and learning from real-world case studies, businesses can make informed decisions to harness the power of their data.